Emerging UX Patterns for Generative AI Apps & Copilots

Charlene Chambliss

Senior Software Engineer

(NOTE: This post was originally published on Aquarium’s Tidepool blog. Aquarium has since been acquired by Notion.)

Why you should care about your app’s UX

LLM-powered applications, particularly those with chat interfaces, appear to have significant problems with user churn. For a multitude of reasons, including not understanding how to use the app, getting bad or unreliable results, or simply finding using the app too tedious to use, users often abandon these tools nearly as quickly as they came. Knowledge workers are tired of juggling multiple apps, and are looking for ways to pare down their tool stack to the bare minimum needed to get their work done.

In addition, the influx of cash into generative AI applications has created a heated market with significant competition in every vertical. Chances are, the problem your app is solving is also being tackled by multiple other companies, and everyone is in the early stages right now, meaning there is often rough feature parity between different apps solving the same problem.

On a personal level, I’ve tried dozens of LLM tools and apps over the last year or so, and only a handful have stuck with me and made it into my daily workflow. Those tools and apps all have standout UX that simply makes them easier and more pleasant to use than competing tools. Perhaps most importantly, the tools were nearly as easy to get started with and use as it was for me to stick to my existing workflow – there has been a definite payoff in productivity after an initial modest learning curve.

Not every pattern here will be applicable for every use case, but many of these apply across different environments as well as across different problems to be solved. Chances are, however, that your most engaged users have already been asking (directly or indirectly) for some of these features and conveniences. Pick and choose individual strategies to implement from these patterns, or use them as inspiration for a feature that may fit your tool even better, and over time you’ll have a darned good app.

We’ll be taking cues from some of the most successful AI-native and AI-enhanced tools currently on the market: Raycast, Arc Browser, Perplexity, Notion, Midjourney, GitHub Copilot, Sourcegraph Cody, Gmail, and our very own Tidepool.

Without further ado, here are eight patterns that make for great generative AI applications.

Have a previously-static box do double duty

Examples: Arc, Raycast AI

This nifty pattern allows users to continue using their existing workflows, with the option of exploring new functionality when desired, or when the “old” functionality doesn’t return any results.

Arc’s search box opened with familiar CMD+F doubles as both a search box and an integrated chat-with-this-webpage interface.

Arc’s CMD+F “find” box

Arc’s CMD+F “find” box

In addition to being perfectly usable for search, the box accommodates non-questions, such as a request to summarize this Wikipedia page:

Arc’s summary of DJ horsegiirL’s Wikipedia page

Arc’s summary of DJ horsegiirL’s Wikipedia page

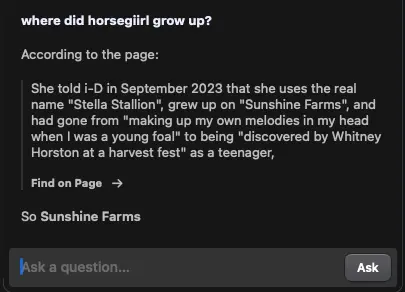

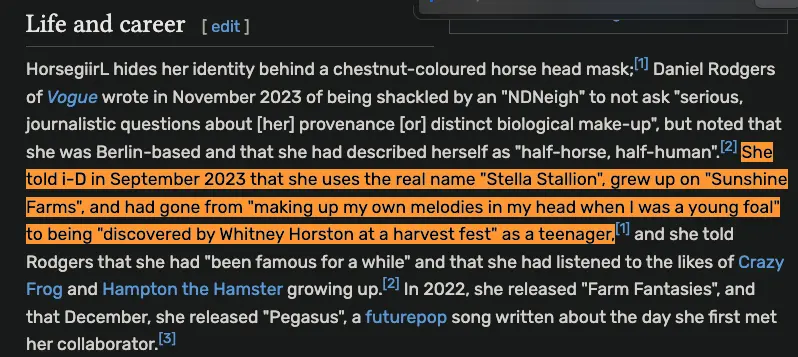

And when answering a question, the model has clearly been set up to provide a TL;DR for the answer, in line with the expectations of a user who originally just wanted to “Find” something:

Arc answering a question about the page

Arc answering a question about the page

Arc also integrates the generated answer directly back into the browsing experience; clicking “Find on Page” will scroll to and highlight the quoted text.

Arc highlighting the quote provided in the previous image after clicking “Find in Page”

Arc highlighting the quote provided in the previous image after clicking “Find in Page”

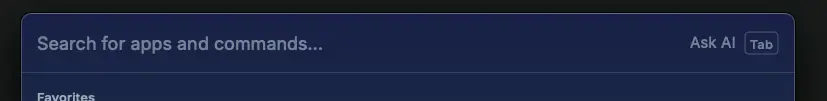

Similarly, Raycast has the ability to invoke Ask AI on any text you type into the app/command search field, providing convenient one-button access to answers, while also gently introducing users to a feature they may not have tried yet.

Raycast’s search bar doubling as an Ask AI entrypoint (Ask AI itself provides an entrypoint into a multi-turn AI Chat later, if desired)

Raycast’s search bar doubling as an Ask AI entrypoint (Ask AI itself provides an entrypoint into a multi-turn AI Chat later, if desired)

Turn common intents (prompt types) into commands

Examples: Sourcegraph Cody and GitHub Copilot, Notion

This pattern is great for users because it allows them to take a workflow that normally requires a lot of typing/setup and turn it into an invokable command, which can then be bound to a hotkey. This also helps to concretize “what you can do” with the tool, for users who aren’t used to natural-language interfaces and don’t yet have their own ideas.

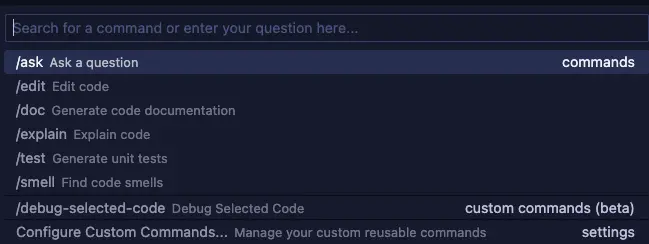

Sourcegraph Cody’s list of default commands (and one of my custom commands)

Sourcegraph Cody’s list of default commands (and one of my custom commands)

Bonus points: also group commands by type, making it easier to skim the list to find what the user is looking for.

A partial list of Notion’s AI commands, grouped by intent (write, generate, edit)

A partial list of Notion’s AI commands, grouped by intent (write, generate, edit)

Turn common prompt elements into knobs and settings

Examples: Midjourney, “AI Profiles” or system instructions as seen in ChatGPT, Perplexity, Raycast AI

This is a pattern commonly seen in image generation interfaces. When a text input box is the primary means of specifying what you want, repeatedly specifying certain settings and preferences can get tedious.

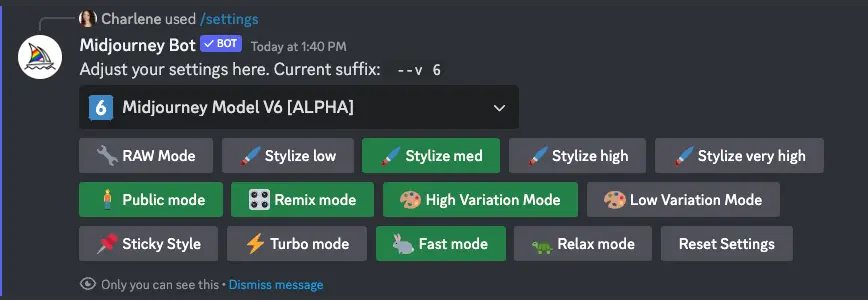

While Midjourney is currently working on an alpha of a fully-featured website interface for generating images with their model, they also offer similar functionality with the Discord bot. /prefer suffix can be used to attach a suffix to all generations performed after changing it, which allows easily reusing a particular phrase or a set of parameters (e.g. --ar 3:2 --chaos 20 --style raw --seed 6).

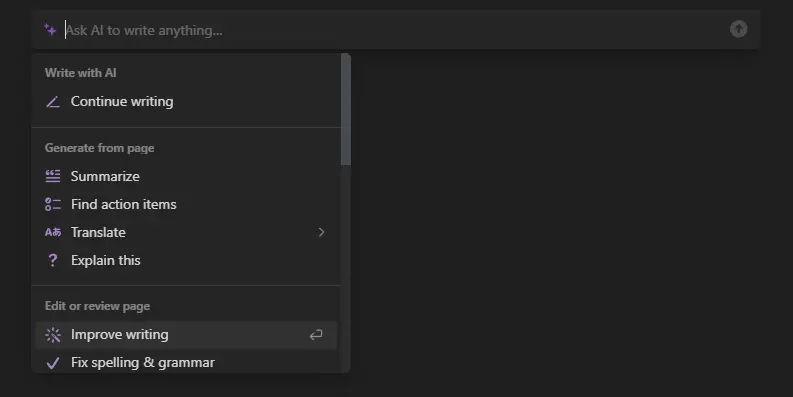

Some of these commonly tweaked options can also be changed via the /settingscommand, which provides convenient buttons.

Midjourney Bot’s /settings module

Midjourney Bot’s /settings module

This helps the user do less typing and copying and pasting. It’s even more important to have something like this for LLM apps on mobile platforms, where typing is several times slower for most people.

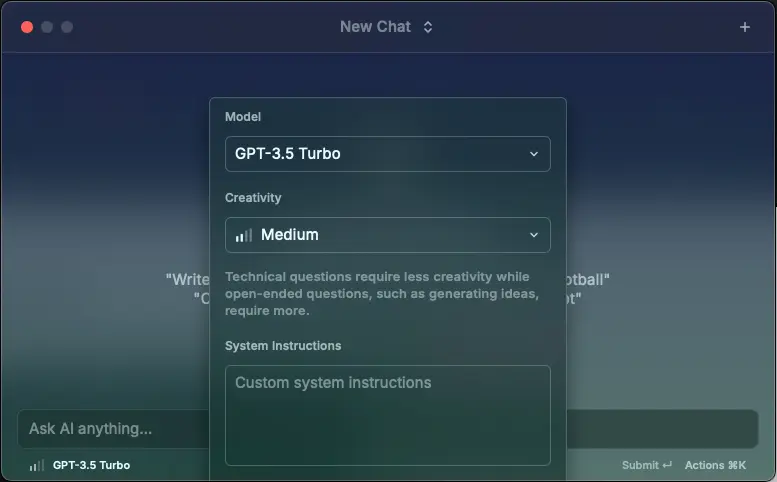

Another example is Raycast AI, which allows you to set system instructions on a chat-by-chat basis instead of only globally for your entire account. System instructions are typically used to set the subject or tone of a conversation, or to enforce rules about how the model should respond (“be concise,” “respond in Portuguese only,” etc.).

Raycast’s UI for changing conversation-level settings such as model, temperature, or system instructions

Raycast’s UI for changing conversation-level settings such as model, temperature, or system instructions

Start with suggestions

Examples: ChatGPT (and GPTs on the GPT Store), Perplexity, Tidepool, many others

“Blinking cursor syndrome” affects us all – when seemingly anything is possible, what do you try first? The best LLM apps have figured out that it helps new and experienced users alike to provide some suggestions on what can be done with the tool.

This technique increases stickiness / decreases likelihood of new users bouncing, and also doubles as a convenient method for introducing new features to repeat visitors, who may not be aware of what’s been updated since the last time they used it.

ChatGPT’s landing page, which provides several ideas for where to start (as well as a disclaimer about information accuracy)

ChatGPT’s landing page, which provides several ideas for where to start (as well as a disclaimer about information accuracy)

Perplexity’s Discover tab acts as sort of a news feed, giving users an entry point into what other people are curious about right now. Users can very easily start their own chats on the same topic, then wander in whatever direction they’d like.

Perplexity’s Discover tab, which includes many interesting news stories to start a conversation about

Perplexity’s Discover tab, which includes many interesting news stories to start a conversation about

Perplexity additionally places suggestions for “related” questions at the bottom of each answer, providing an easy way for the user to continue with the conversational thread without having to type out some of the more obvious follow-up questions.

Perplexity’s “Related Questions” component, which displays at the bottom of each answer

Perplexity’s “Related Questions” component, which displays at the bottom of each answer

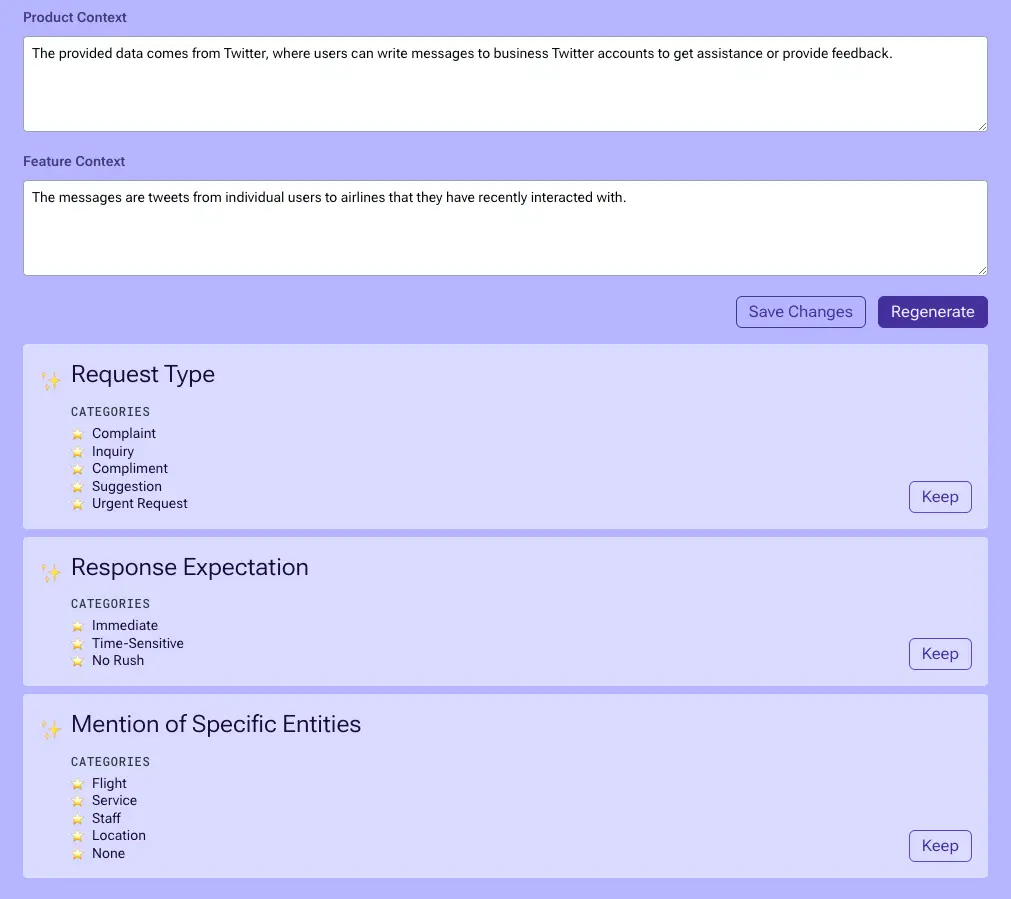

In our Quickstart Guide for Tidepool, we show off our Suggested Attributes feature. This feature takes in some context about the data itself (pictured here), as well as what kind of analysis the user is interested in predicting (not pictured), and uses it to detect Attributes that are correlated with target metadata, or otherwise aligned with the user’s preferences:

The bottom portion of Tidepool’s Suggest Attributes form, displaying the list of attributes that were detected in an airline support datase

The bottom portion of Tidepool’s Suggest Attributes form, displaying the list of attributes that were detected in an airline support datase

Make AI a supporting feature, not the main draw

Examples: Gmail and Google Docs autocomplete, GitHub Copilot, Notion

This can be a particularly good strategy for consumer apps which are used by a wide variety of demographics and which need to be very careful about changing anything about their existing design, for fear of angering or confusing longtime users.

My partner, who isn’t as interested in today’s AI shenanigans as I am, was typing into Gmail one day, and I watched as Gmail’s algorithm correctly predicted many words that he was typing, but he never accepted the suggestions. I asked, “Do you ever accept the suggestions that Gmail gives you?” and he said, “What?”

He, a daily user of Gmail for 10+ years, had not even noticed the suggestions as they were coming through, presumably because (a) he’s a fast typist and is generally just focused on finishing writing the email, and (b) the suggestions are actually quite subtle, only appearing as “shadow text” one or two words at a time, and are thus pretty easy to ignore.

Google Docs, correctly predicting what I was about to write in this post

Google Docs, correctly predicting what I was about to write in this post

Perhaps some might consider this a bit too subtle of an implementation, since even a daily user of Gmail didn’t realize what was happening there, but it is a surefire way to avoid angering existing users when introducing a potentially controversial feature. The feature is simultaneously easy to use while also being nearly impossible to misuse.

For pro users who do want to use the AI features all the time, add the ability to trigger the AI features at-will with an easy-to-reach command. For example, Copilot’s “automatic suggestion” behavior can be turned off completely, and users can simply invoke the command to trigger a completion when they do want some assistance. This can reduce feelings of the AI “getting in the way” by producing distracting or irrelevant suggestions that interrupt the user’s train of thought.

Notion does something similar by providing an unobtrusive shortcut to get to the AI features while the user is in a new block in page editing mode. Since people don’t typically begin a block with “space,” Notion can use a very easy-to-reach and easy-to-remember key with low risk of people triggering it accidentally.

Notion’s subtle UI hint about how to invoke AI commands

Notion’s subtle UI hint about how to invoke AI commands

Expand on or rewrite user input behind the scenes to produce a better result

Examples: ChatGPT + DALL-E 3, Tidepool

Anyone running an LLM pipeline (using LLM outputs for LLM inputs) has likely noticed by now that quality can vary drastically depending on user inputs. At best, the model produces a less-than-stellar answer, and at worst, your model could get jailbroken and produce unpredictable or problematic output. As such, it’s a good strategy to fence off user inputs from the rest of the prompt as much as possible, or perhaps not even use the user input directly.

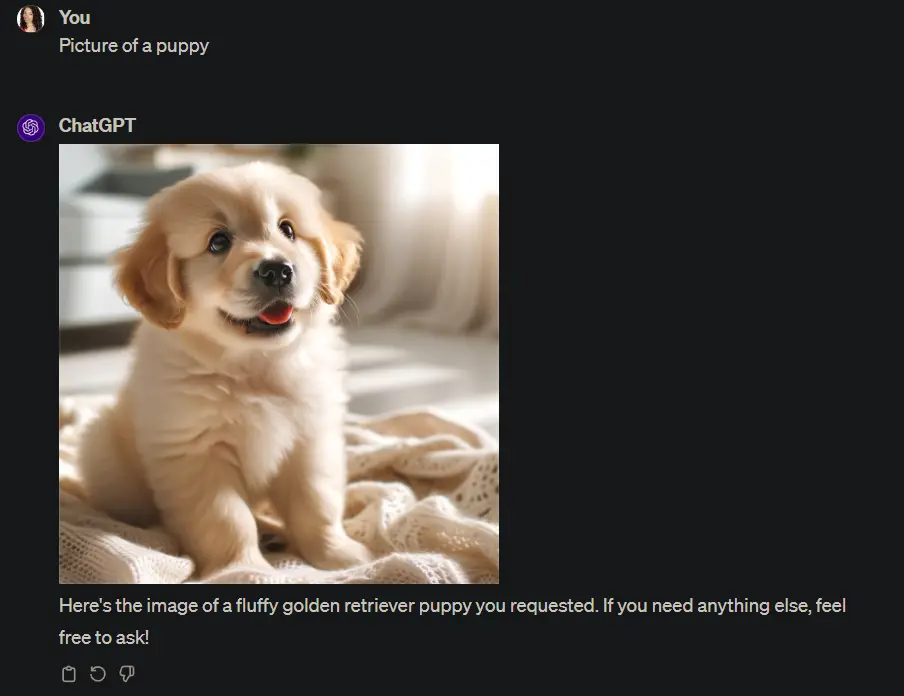

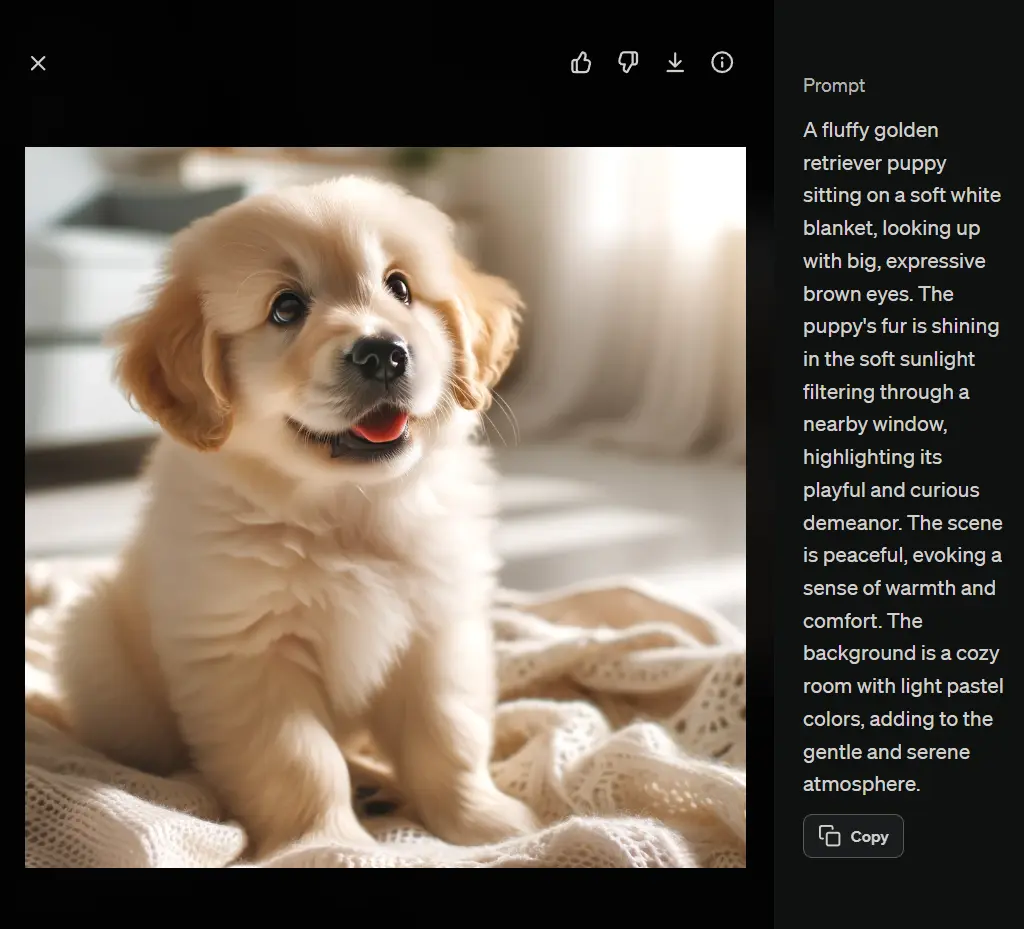

OpenAI uses this strategy to great effect with DALL-E 3. When a user enters a DALL-E 3 prompt to ChatGPT, the user’s prompt is rewritten behind the scenes, specifically in a way that significantly increases the detail of the prompt. Additional information is included, such as the desired focus and composition, the background and other objects in the scene, the lighting, and the vibe that should be evoked.

Generally, the “rewriter” step is instructed not to change any details provided by the user, but rather to augment the prompt with any details that don’t yet exist. The rewriter may also do things like fix errors (for example, reinterpret some text written by the user that appears to be a typo rather than their real intent). This helps shorten the feedback loop for users, who may end up with an unintended generation result after a long wait time otherwise.

Me asking DALL-E 3 for a “picture of a puppy,” with no further details

Me asking DALL-E 3 for a “picture of a puppy,” with no further details

The much longer and more comprehensive prompt that they rewrote it to in the background, shown on expanding ChatGPT’s response

The much longer and more comprehensive prompt that they rewrote it to in the background, shown on expanding ChatGPT’s response

We also leverage this technique in Tidepool: we take the initial description provided by the user about how the text data should be analyzed, and expand it into a more detailed labeling guide for the model behind the scenes, which allows us to create a high-quality zero-shot classifier for their desired analysis.

This technique should be used carefully, as some users could get annoyed by the model making assumptions about what they wanted. Be sure to use it in contexts where the result will not imply that your app is passing judgment on the quality, utility, or validity of the user’s original input.

Add affordances for selecting particular pieces of context

Examples: Perplexity, Sourcegraph Cody, GitHub Copilot

For use cases that rely on RAG (retrieval-augmented generation) to function effectively, retrieving the right context is extremely important. Oftentimes, the user can have a better sense of which context is required than whatever system is in place to automatically retrieve context based on the initial query.

For this reason, it’s a good idea to allow the user to selectively specify context that should definitely be included in the retrieved documents. This can augment the existing retrieval system and produce better answers than the model could have produced from only the automatically-retrieved documents.

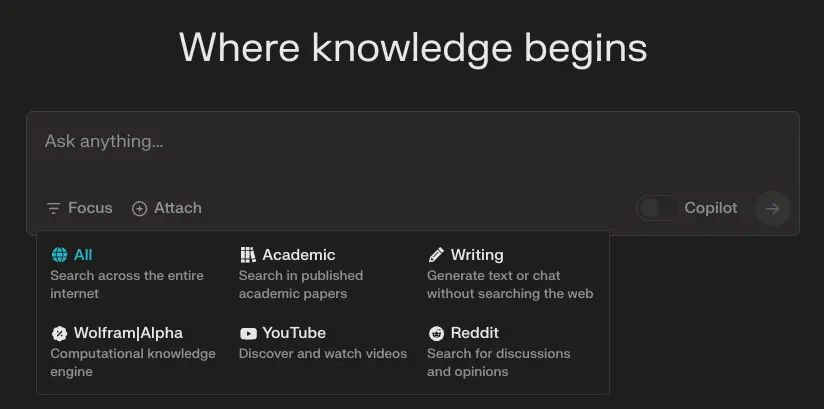

Perplexity has the ability to select a “focus,” for when the user preferentially wants the system to read from a particular source or set of sources. “Reddit” focus is one of my most-used focuses when I want real people’s experiences with something, because it will only reference search results from Reddit. Oftentimes, I prefer this in lieu of the more “curated” information coming from SEO-optimized review websites, which is what I would get with the default focus.

“Attach” also allows the user to directly upload a document of their choosing that will be exclusively referenced while generating an answer.

Perplexity’s “Focus” panel, allowing users to steer the search results referenced by the model

Perplexity’s “Focus” panel, allowing users to steer the search results referenced by the model

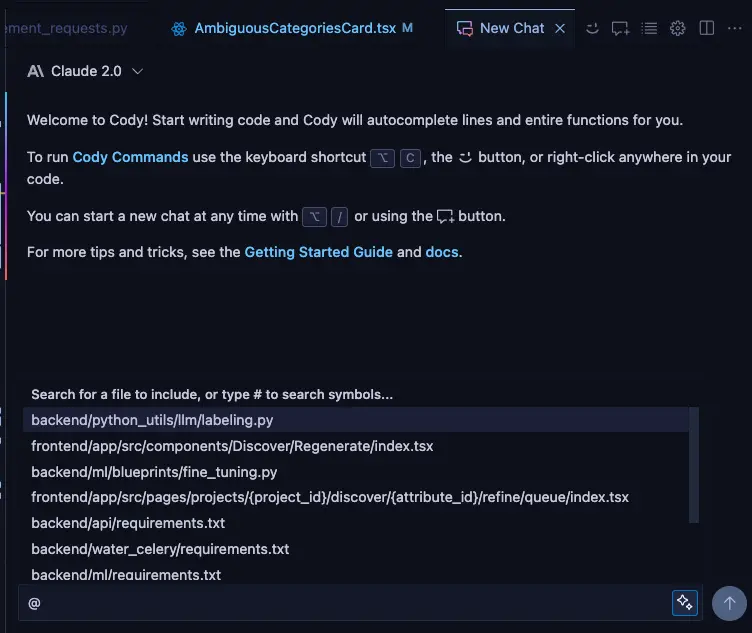

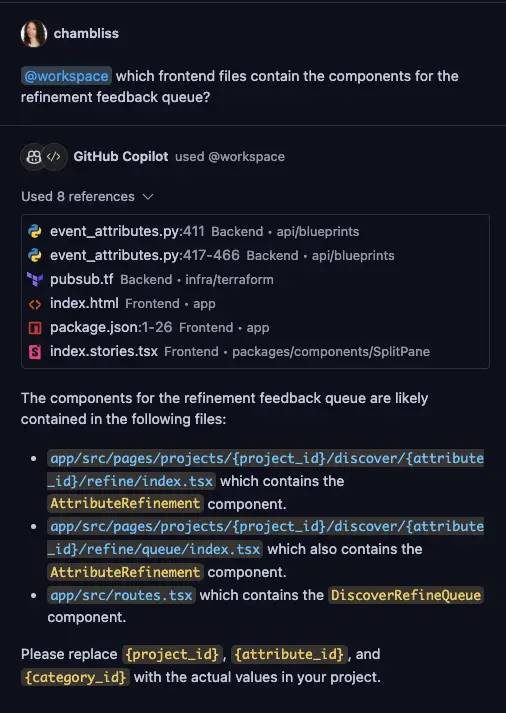

Sourcegraph Cody and Github Copilot both also provide examples of this. Copilot allows you to add @workspace to the beginning of your query to ensure Copilot answers your question while referencing context from your codebase.

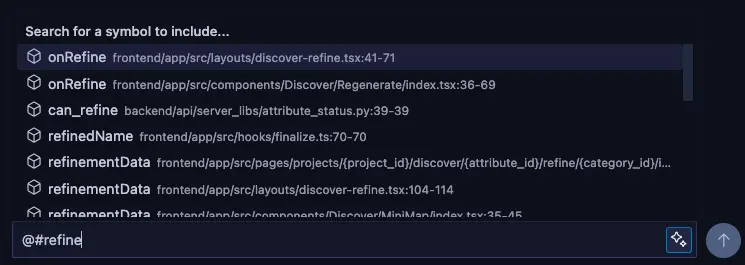

Cody goes even further, allowing you to use @ to include specific files, or even @# to include specific symbols (e.g. functions, classes, and so on) so that you don’t have to just cross your fingers and hope that the retrieval system finds the right context within your entire workspace.

Cody allowing me to add specific files to be referenced when answering my question

Cody allowing me to add specific files to be referenced when answering my question

Cody allowing me to include particular classes, functions, and variables when answering my question

Cody allowing me to include particular classes, functions, and variables when answering my question

Make verification and fact-checking easy for users

Examples: Arc, Perplexity, GitHub Copilot, Sourcegraph Cody

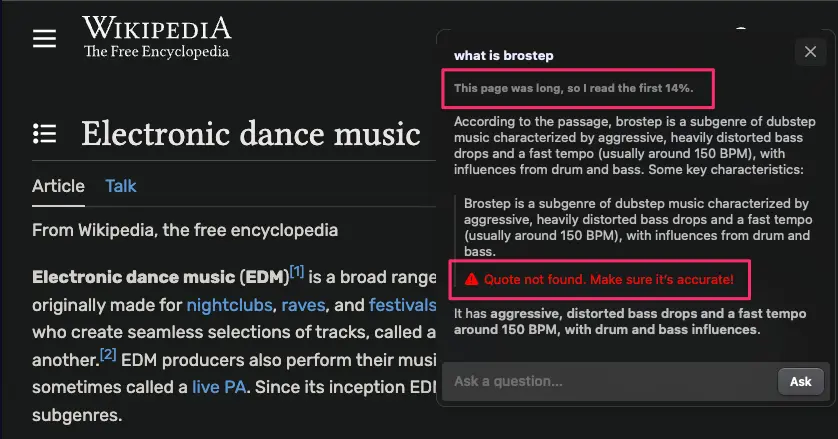

When asking Arc a question about a long page, it will actively warn you that it was only able to fit X% of the page into the model’s context window. And when clicking on “Find on Page” for a provided quote, if it’s not able to find that exact quote, it will warn you that the quote may have been hallucinated by the model.

Arc helpfully letting me know that the Wikipedia page for “Electronic dance music” was too long for it to review the whole thing, and additionally indicating that the quote provided in the answer may not be a real quote from the article

Arc helpfully letting me know that the Wikipedia page for “Electronic dance music” was too long for it to review the whole thing, and additionally indicating that the quote provided in the answer may not be a real quote from the article

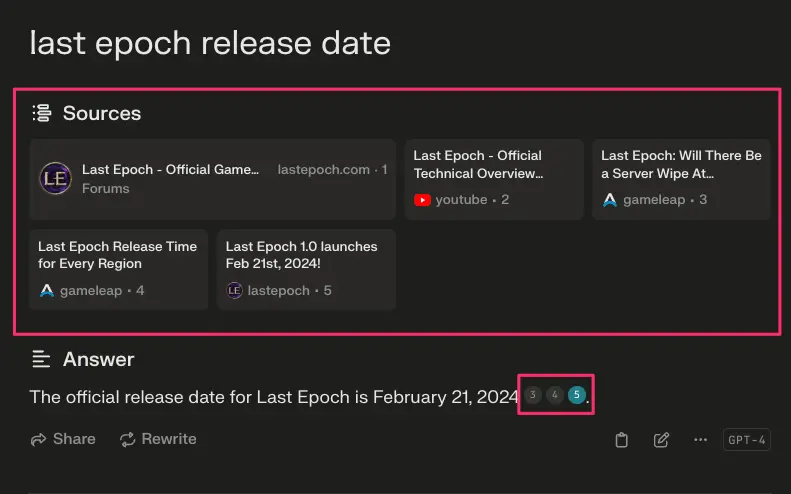

Perplexity is another obvious example, prominently providing the list of sources for questions, along with their inline citation numbers. We can see that this specific claim about the release date is purported to be present in at least 3 sources, making it perhaps more trustworthy than a claim with only one backing source.

Perplexity providing a source list and several inline citations for its answer.

Perplexity providing a source list and several inline citations for its answer.

The following example from GitHub Copilot is a particularly illustrative one for this UX pattern, because Copilot seems to have referenced none of the correct files for my question, and as a result produced a low-quality (although partially correct) answer. As a user, seeing that the referenced files are wrong helps me level-set my expectations as to how likely the model is to be accurate when answering my query. This helps users avoid wasting time due to taking false advice or information from the model.

Using the previously-mentioned @workspace shortcut to ask Copilot about our frontend codebase; Copilot returned a list of irrelevant files as its references, so I know I can disregard the answer

Using the previously-mentioned @workspace shortcut to ask Copilot about our frontend codebase; Copilot returned a list of irrelevant files as its references, so I know I can disregard the answer

Conclusion

We’re still only in the very beginning stages of developing LLM-powered interfaces, but there have been some early lessons learned in this first couple of years of building in earnest.

The most important themes here are to:

- Onboard gradually and unobtrusively, by integrating AI features into default workflows and giving gentle nudges and suggestions

- Empower the user to do the steering, by making it easy to invoke the AI features only when the user needs them

- Provide affordances to standardize common or frequent tasks, such as knobs, settings, and templates

With this mindset of progressively adding value to the user via suggestions, staying out of the way when they want to be in charge, and empowering pro users to customize the tool for their preferences, we can bring forth the kind of intuitive, empowering, time-saving workflows that the advent of generative AI promises to deliver.